Abstract

We propose an approach for optimizing high-quality clothed human body shapes in minutes from posed multi-view images.

Traditional neural rendering methods struggle to disentangle geometry from appearance using a rendering loss alone, and they are computationally intensive. Our method instead uses a mesh-based patch warping technique to enforce multi-view photometric consistency, and spherical harmonics (SH) illumination to refine geometric details efficiently.
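To make the idea of multi-view photometric consistency concrete, the sketch below scores how well a reference patch agrees with the same surface patch warped into other views. It assumes normalized cross-correlation (NCC) as the similarity measure, which is a common choice in multi-view stereo; the exact loss used by the method is not stated in this summary, and the names `ncc` and `photometric_loss` are hypothetical.

```python
import math


def ncc(patch_a, patch_b, eps=1e-8):
    """Normalized cross-correlation of two equally sized grayscale patches.

    Patches are flat lists of intensities. The result lies in [-1, 1] and is
    invariant to per-patch gain and offset changes.
    """
    if len(patch_a) != len(patch_b) or not patch_a:
        raise ValueError("patches must be non-empty and of equal size")
    mean_a = sum(patch_a) / len(patch_a)
    mean_b = sum(patch_b) / len(patch_b)
    da = [a - mean_a for a in patch_a]
    db = [b - mean_b for b in patch_b]
    num = sum(x * y for x, y in zip(da, db))
    den = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return num / (den + eps)


def photometric_loss(ref_patch, warped_patches):
    """Mean (1 - NCC) between a reference patch and its warps in other views.

    Assumption: the warped patches have already been resampled from the
    source images, e.g. via a homography induced by the local surface plane.
    """
    if not warped_patches:
        raise ValueError("need at least one warped patch")
    return sum(1.0 - ncc(ref_patch, w) for w in warped_patches) / len(warped_patches)
```

A loss near 0 means the patch looks the same in every view, which is what a correctly placed and oriented surface element should produce; a misplaced element yields inconsistent patches and a larger loss.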

We adopt an oriented point cloud shape representation together with SH shading, which significantly reduces optimization and rendering times compared to implicit methods.
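As an illustration of why SH shading is cheap to evaluate on oriented points, the sketch below shades a single point from its normal, an albedo value, and nine second-order SH lighting coefficients. It assumes a Lambertian model with the standard real SH basis up to degree 2; the degree and the function names `sh_basis` and `shade` are assumptions for this example, not details confirmed by the text.

```python
import math


def sh_basis(normal):
    """Real spherical harmonics basis up to degree 2 (9 terms) at a normal."""
    x, y, z = normal
    length = math.sqrt(x * x + y * y + z * z)
    if length == 0.0:
        raise ValueError("normal must be non-zero")
    x, y, z = x / length, y / length, z / length
    return [
        0.282095,                        # Y_0^0
        0.488603 * y,                    # Y_1^-1
        0.488603 * z,                    # Y_1^0
        0.488603 * x,                    # Y_1^1
        1.092548 * x * y,                # Y_2^-2
        1.092548 * y * z,                # Y_2^-1
        0.315392 * (3.0 * z * z - 1.0),  # Y_2^0
        1.092548 * x * z,                # Y_2^1
        0.546274 * (x * x - y * y),      # Y_2^2
    ]


def shade(albedo, normal, sh_coeffs):
    """Shaded intensity: albedo times SH lighting evaluated at the normal."""
    if len(sh_coeffs) != 9:
        raise ValueError("expected 9 SH coefficients")
    lighting = sum(c * b for c, b in zip(sh_coeffs, sh_basis(normal)))
    return albedo * max(lighting, 0.0)  # clamp negative lighting to zero
```

Each shaded value costs one nine-term dot product per point, with no ray marching or network evaluation, and the same expression is differentiable with respect to the normal, the albedo, and the lighting coefficients.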

Our approach shows promising results on both synthetic and real-world datasets, making it an effective solution for rapidly generating high-quality human body shapes.

Method

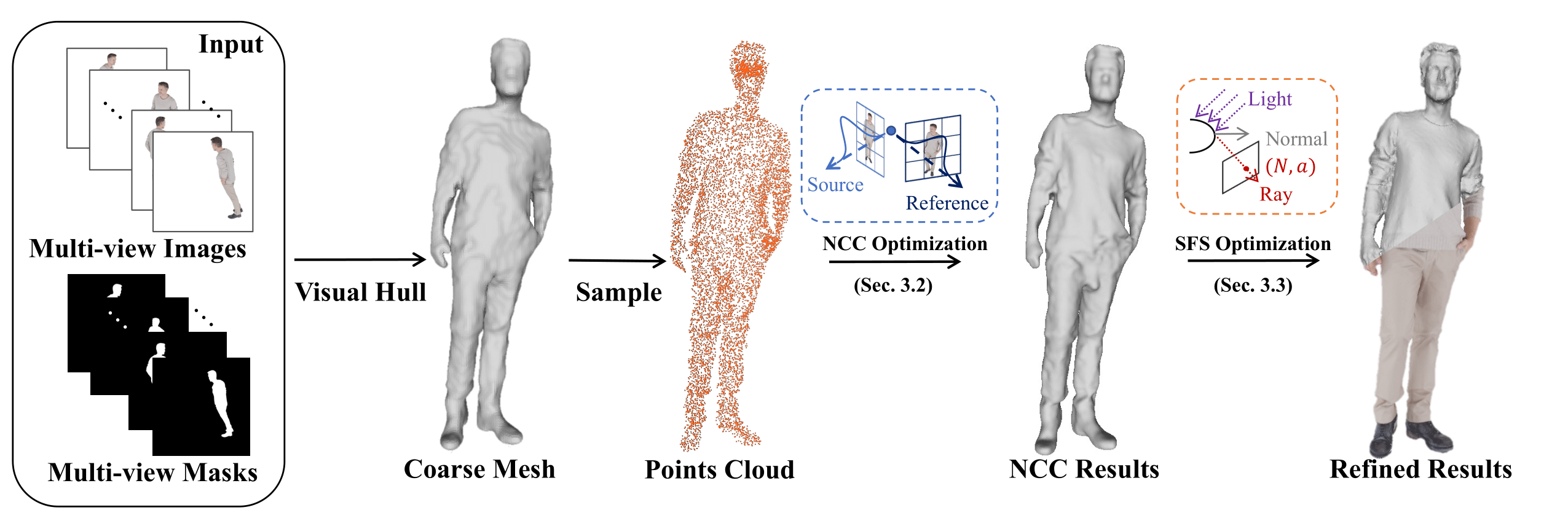

We first compute a visual hull to obtain the initial mesh. We then sample oriented point clouds from it as the optimization target and optimize them with multi-view patch-based photometric constraints. Finally, we fix the mesh topology and apply a shape-from-shading refinement to refine the coarse mesh and recover the albedo.
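The sampling step can be sketched as follows: draw points uniformly over the surface of a triangle mesh and attach the face normal to each one, which yields an oriented point cloud. This is a minimal illustration using area-weighted face selection and uniform barycentric sampling; the function name `sample_oriented_points` and the use of per-face normals are assumptions, and the authors' actual sampling scheme may differ.

```python
import math
import random


def sample_oriented_points(vertices, faces, n_points, seed=0):
    """Sample (position, normal) pairs uniformly over a triangle mesh.

    vertices: list of (x, y, z) tuples; faces: list of (i, j, k) index tuples.
    """
    rng = random.Random(seed)
    areas, normals = [], []
    for i, j, k in faces:
        a, b, c = vertices[i], vertices[j], vertices[k]
        e1 = [b[d] - a[d] for d in range(3)]
        e2 = [c[d] - a[d] for d in range(3)]
        cross = [
            e1[1] * e2[2] - e1[2] * e2[1],
            e1[2] * e2[0] - e1[0] * e2[2],
            e1[0] * e2[1] - e1[1] * e2[0],
        ]
        length = math.sqrt(sum(v * v for v in cross))
        areas.append(0.5 * length)
        # Degenerate faces get zero area, so they are never selected below.
        normals.append(tuple(v / length for v in cross) if length > 0 else (0.0, 0.0, 0.0))

    # Pick faces with probability proportional to their area.
    chosen = rng.choices(range(len(faces)), weights=areas, k=n_points)

    points = []
    for f in chosen:
        i, j, k = faces[f]
        # The square-root trick gives uniform barycentric coordinates.
        s = math.sqrt(rng.random())
        r = rng.random()
        u, v, w = 1.0 - s, s * (1.0 - r), s * r
        p = tuple(
            u * vertices[i][d] + v * vertices[j][d] + w * vertices[k][d]
            for d in range(3)
        )
        points.append((p, normals[f]))
    return points
```

For example, `sample_oriented_points([(0, 0, 0), (1, 0, 0), (0, 1, 0)], [(0, 1, 2)], 100)` returns 100 points inside the triangle, each paired with the normal `(0.0, 0.0, 1.0)`.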

Reconstruction Results

Results on NHR dataset

BibTeX

@inproceedings{lin2024fasthuman,
  title={FastHuman: Reconstructing High-Quality Clothed Human in Minutes},
  author={Lixiang Lin and Songyou Peng and Qijun Gan and Jianke Zhu},
  year={2024},
  booktitle={International Conference on 3D Vision, 3DV},
}